Why 90% of AI Efforts Fail (and How to Ship Systems That Don't)

The hard truths about why 90% of AI efforts fail and the proven methodology for building AI systems that actually ship and scale in production.

Prasanna Hariram

8/22/20257 min read

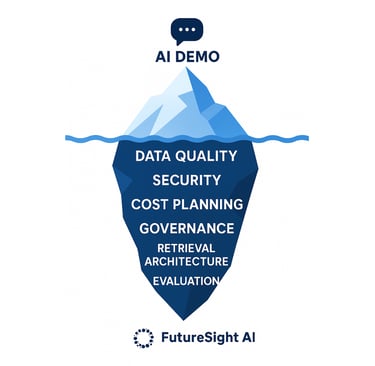

We love demos. You paste some text into a shiny chat box, a model hallucinates something plausible, and for a brief moment you glimpse the future. Then you try to ship it and… the demo gradient vanishes.

Instead of a moonshot, you get a slow bleed: pilots that never leave the lab, bills that don't match the slideware, brittle retrieval, compliance checklists, security incidents, and confused users.

If that sounds familiar, you're not alone. The last 18 months have been a global lesson that GenAI is not a model—it's a socio-technical system: data pipelines, retrieval, evaluations, policy, UX, ops.

The good news: there is a repeatable way to increase your odds.

The bad news: it's more plumbing than magic.

Let's be honest about the failure modes, then walk a path from demo to durable production.

The Anatomy of AI Project Failures

Before we dive into solutions, let's diagnose why most AI initiatives stall. Understanding these failure patterns is the first step toward avoiding them.

Pilot-to-Production Purgatory

The Problem:

Projects get stuck in endless pilot phases with no clear path to production.

Root Causes:

No designated business owner with P&L responsibility

Success metrics that sound good but don't tie to business outcomes

Integration costs that weren't factored into the original ROI calculations

No defined service level objectives (SLOs)

The Fix:

Assign a P&L owner from day one. Define a single, measurable success metric. Scope to one specific workflow. Most importantly, integrate into existing systems of record, not sandboxed environments.

The ROI Reality Check

The Problem:

Costs spiral beyond projections, destroying the business case.

Root Causes:

Expensive model inference costs at scale

Massive context windows driving up token usage

Hidden operational expenses: RAG infrastructure, evaluation systems, guardrails

The Fix:

Implement cost-aware orchestration with intelligent model routing. Cache aggressively. Use smaller, specialized models where possible. Most critically, price the entire system—not just token costs—before committing to scale.

Data Quality Quicksand

The Problem:

Retrieval systems return irrelevant, outdated, or contradictory information.

Root Causes:

Legacy knowledge bases that haven't been maintained

Naive document chunking strategies

Missing metadata and versioning

Siloed information across departments

The Fix:

Treat content lifecycle management as a first-class product requirement. Implement systematic curation and duplication. Add rich metadata and schema. Deploy hybrid retrieval combining BM25 and vector search with proper source attribution.

The Reliability Backlash

The Problem:

Users lose trust after experiencing hallucinations and overconfident responses.

Root Causes:

UX designs that present AI outputs as definitive answers

No uncertainty quantification or confidence scoring

Missing fallback mechanisms for edge cases

The Fix:

Design retrieval-first experiences with mandatory source citations. Build uncertainty awareness into the interface. Provide clear escalation paths to human experts for high-stakes decisions.

Governance Afterthoughts

The Problem: Regulatory compliance becomes a last-minute blocker to launch.

Root Causes:

Policy frameworks retrofitted after system design

Unclear risk classification for the use case

No documentation trail for audit purposes

The Fix: Classify your use case early using frameworks like the EU AI Act. Maintain comprehensive documentation, testing protocols, and incident response plans from the beginning. Scope down risk where necessary rather than fighting regulations.

Security Blind Spots

The Problem:

Prompt injection attacks, data leakage, and supply chain vulnerabilities emerge in production.

Root Causes:

Insufficient input validation and output filtering

Direct exposure of system prompts to user input

Inadequate threat modeling for AI-specific attack vectors

The Fix:

Implement secure RAG patterns with proper input isolation. Maintain allowlists for external data sources. Conduct regular red-team exercises and vulnerability assessments.

A Systematic Approach to AI Success

Now that we've identified the failure modes, let's walk through a proven methodology for building AI systems that actually ship and deliver value.

Phase 1: Foundation and Focus

1. Pick One Workflow and One KPI

The biggest mistake organizations make is trying to solve too many problems simultaneously. Choose a narrow, frequently occurring task—like support answer drafting for a specific product category. Assign a clear business owner who owns the P&L impact.

Define success as a measurable business delta: resolution time reduction, customer satisfaction improvement, or deflection rate increase. Avoid vanity metrics like "AI accuracy" that don't correlate with business outcomes.

2. Baseline Without AI

Before building anything with AI, measure today's performance using existing tools: templates, keyword search, or manual processes. If your AI system can't demonstrably outperform this baseline, you're not ready to scale.

This baseline becomes your minimum viable performance threshold and helps you calculate genuine ROI.

3. Make Data the Product

Your AI system is only as good as the data it retrieves. Invest in content curation as a first-class product capability:

Deduplicate and canonicalize: Remove redundant information and establish single sources of truth

Add rich metadata: Include source attribution, version numbers, effective dates, and access permissions

Establish content lifecycle: Define clear processes for publishing, updating, expiring, and archiving information

Quality gates: Implement review processes before content enters the retrieval corpus

Remember: garbage in equals confident nonsense out.

Phase 2: Architecture and Security

4. Design Retrieval Before Prompts

Retrieval-augmented generation should be your default architecture pattern. Build a robust retrieval system that combines:

Hybrid search: Lexical search (BM25) for exact matches plus vector search for semantic similarity

Intelligent chunking: Balance semantic coherence with structural document boundaries

Re-ranking and diversity: Ensure source diversity and relevance scoring

Version control: Track document versions and effective dates

Always ground AI responses with cited passages and source attribution.

5. Threat Model the Entire System

Security isn't an afterthought—it's a core architectural requirement. Enumerate potential risks:

Prompt injection and jailbreaking attempts

PII exfiltration through crafted queries

Supply chain vulnerabilities in external dependencies

Data poisoning through compromised sources

Implement defensive measures: input isolation, content filtering, output validation, and comprehensive logging for audit trails.

6. Choose Models with a Router, Not a Marriage

The AI landscape changes rapidly. Design a multi-model abstraction layer that routes requests based on:

Task complexity: Use smaller models for classification, larger ones for synthesis

Context requirements: Match context window needs to model capabilities

Latency and cost: Balance response time with computational expenses

Availability and reliability: Provide fallback options for model outages

Plan to evaluate and potentially swap models quarterly.

Phase 3: User Experience and Reliability

7. Engineer UX for Uncertainty

AI systems are probabilistic, and your user interface should reflect this reality:

Show sources by default: Make source attribution prominent, not hidden

Calibrate confidence: Display uncertainty when the system isn't sure

Provide control mechanisms: "Defer to human" and "show exact document" options

Staged deployment: Require human approval for high-stakes decisions, allow auto-approval for low-risk scenarios

8. Build Evaluation Infrastructure Early

Create comprehensive testing frameworks before you need them:

Golden datasets: Curate real-world examples with verified correct answers

Multi-dimensional metrics: Track usefulness, faithfulness, citation accuracy, latency, and cost

Policy compliance: Automated checks for regulatory and content policy violations

Regression testing: Automated test suites that run on every system change

Treat your evaluation code with the same rigor as your production code.

Phase 4: Operations and Optimization

9. Plan Total Cost of Ownership

Model inference is just one component of your total system cost. Include:

Retrieval infrastructure and database costs

Evaluation and testing system expenses

Guardrails and safety mechanism overhead

Human review and oversight time

Observability and monitoring tools

Set budget SLOs (e.g., <$0.03 per resolved customer query) and enforce them through routing policies, caching strategies, and context truncation.

10. Instrument Everything

Comprehensive observability enables continuous improvement:

Log comprehensively: Prompts, retrieval results, model versions, user actions, business outcomes

Build dashboards: Connect model behavior directly to business KPI movement

Enable experimentation: A/B testing framework for continuous optimization

Monitor drift: Track changes in user behavior, content performance, and model effectiveness

11. Governance as Enablement

Transform compliance from a gate into a feature that accelerates deployment:

Risk classification: Use established frameworks to categorize your use case

Documentation: Maintain model cards, system documentation, and data lineage

Testing protocols: Regular red-team exercises and performance evaluations

Incident response: Clear escalation procedures and rollback capabilities

Good governance documentation actually speeds approvals and reduces friction.

Phase 5: Human Integration and Scalability

12. Secure Production Deployment

Production security requires ongoing vigilance:

Source allowlists: Strictly control which external sources can be accessed

Content sanitization: Strip active content and validate all inputs/outputs

Periodic red-teaming: Regularly test your own system's vulnerabilities

Security as code: Version control security policies with proper review processes

13. Design for People and Process

Technology deployment without workflow redesign leads to user frustration:

User training: Provide concrete examples and failure mode education

Process integration: Redesign standard operating procedures around AI capabilities

Incentive alignment: Reward teams for business outcome improvement, not AI usage metrics

Change management: Support users through the transition with clear communication and support

14. Avoid Vendor Lock-in by Design

Maintain strategic flexibility in a rapidly evolving market:

Abstract dependencies: Keep prompts, evaluations, and retrieval logic vendor-independent

Plan for portability: Store embeddings with re-embedding strategies documented

Maintain swap readiness: Document migration procedures for your top model alternatives

Control your data: Ensure you can extract and migrate your data at any time

15. Manage IP and Data Provenance

Intellectual property considerations should guide your architectural decisions:

Prefer retrieval over training: Use RAG for proprietary knowledge instead of fine-tuning

Audit training data: When fine-tuning is necessary, use only licensed data with clear provenance

Track lineage end-to-end: Maintain comprehensive records of data sources and transformations

Expose provenance: Make data lineage visible in user-facing citations

Phase 6: Production Readiness

16. Define Clear Readiness Gates

Resist the urge to "vibe ship." Establish concrete criteria for production deployment:

Business validation: Demonstrated KPI improvement in controlled A/B tests

Technical performance: Evaluation benchmarks consistently met

Security approval: Comprehensive security review and penetration testing

Cost validation: Total cost of ownership within approved budget parameters

Operational readiness: On-call procedures, monitoring, and rollback plans documented

Gradual rollout plan: Staged deployment with measurement at each phase

The Path Forward: Systems Thinking Over Model Worship

The pattern behind the 90% failure rate isn't that AI "doesn't work." It's that organizations try to ship a model where they actually need to ship a system.

The winning teams understand this distinction. They're methodical in the best way: they choose focused problems, invest in data quality, design retrieval before generation, measure what matters, and keep humans engaged where judgment is required.

They design for cost control, security, and technological change from day one. They treat AI vendors and models as tools in their toolkit, not as foundational dependencies or objects of faith.

If the demo is the spark that ignites possibility, the system is the engine that delivers sustained value. The magic isn't in the model—it's in the disciplined engineering that surrounds it.

Build the engine. Everything else compounds from there.

Ready to move beyond demos to production AI systems? Our team specializes in helping organizations navigate the journey from prototype to durable, business-critical AI applications. Contact us to discuss your specific challenges and requirements.